Tech

Experts Question Impact of Australia’s New Social Media Ban for Children Under 16

Australia has introduced sweeping restrictions that prevent children under 16 from creating or maintaining accounts on major social media platforms, but experts warn the measures may not significantly change young people’s online behaviour. The restrictions, which took effect on December 10, apply to platforms including Facebook, Instagram, TikTok, Snapchat, YouTube, Twitch, Reddit and X.

Under the new rules, children cannot open accounts, yet they can still access most platforms without logging in—raising questions about how effective the regulations will be in shaping online habits. The eSafety Commissioner says the reforms are intended to shield children from online pressures, addictive design features and content that may harm their health and wellbeing.

Social media companies are required to block underage users through age-assurance tools that rely on facial-age estimation, ID uploads or parental consent. Ahead of the rollout, authorities tested 60 verification systems across 28,500 facial recognition assessments. The results showed that while many tools could distinguish children from adults, accuracy declined among users aged 16 and 17, girls and non-Caucasian users, where estimates could be off by two years or more. Experts say the limitations mean many teenagers may still find ways around the rules.

“How do they know who is 14 or 15 when the kids have all signed up as being 75?” asked Sonia Livingstone, a social psychology professor at the London School of Economics. She warned that misclassifications will be common as platforms attempt to enforce the regulations.

Meta acknowledged the challenge, saying complete accuracy is unlikely without requiring every user to present government ID—something the company argues would raise privacy and security concerns. Users over 16 who lose access by mistake are allowed to appeal.

Several platforms have criticised the ban, arguing that it removes teenagers from safer, controlled environments. Meta and Google representatives told Australian lawmakers that logged-in teenage accounts already come with protections that limit contact from unknown users, filter sensitive subjects and disable personalised advertising. Experts say these protections are not always effective, citing studies where new YouTube and TikTok accounts quickly received misogynistic or self-harm-related content.

Analysts expect many teenagers to shift to smaller or less-regulated platforms. Apps such as Lemon8, Coverstar and Tango have surged into Australia’s top downloads since the start of December. Messaging apps like WhatsApp, Telegram and Signal, which are exempt from the ban, have also seen a spike in downloads. Livingstone said teenagers will simply “find alternative spaces,” noting that previous bans in other countries pushed young users to new platforms within days.

Researchers caution that gaming platforms such as Discord and Roblox, also outside the scope of the ban, may become new gathering points for young Australians. Studies will be conducted to assess the long-term impact on mental health and whether the restrictions support or complicate parents’ efforts to regulate screen time.

Experts say it may take several years to determine whether the ban delivers meaningful improvements to children’s wellbeing.

Tech

ESA and GSMA Launch €100 Million Initiative to Advance Europe’s 6G and AI Ambitions

Europe has stepped up its push to lead in next-generation connectivity with a new partnership between the European Space Agency and the GSMA aimed at strengthening 6G and artificial intelligence capabilities through satellite-based communications.

The two organisations announced at the Mobile World Congress a joint funding programme worth up to €100 million to accelerate the integration of satellite-based non-terrestrial networks (NTN) with terrestrial mobile networks. The initiative marks one of Europe’s most significant public investments to date in hybrid satellite-mobile infrastructure.

Antonio Franchi, head of the 5G/6G NTN Programme Office at ESA, described connectivity as the backbone for unlocking advanced technologies. He said the funding would support the development of networks, services and digital tools that could benefit industries and society at large as digital transformation expands.

The programme is open to companies and organisations based in EU member states, which can apply by submitting formal proposals to ESA. Projects will be selected following an evaluation process.

Funding will focus on four core areas: artificial intelligence-driven management of multi-orbit satellite and ground networks; direct-to-device connectivity for smartphones and Internet of Things devices; collaborative 5G and 6G testing platforms; and early research into edge intelligence and advanced IoT systems.

The types of applications envisioned include telemedicine and telesurgery, autonomous driving systems and precision agriculture, all of which depend on reliable, high-capacity connectivity. By merging satellite coverage with mobile infrastructure, the initiative aims to extend high-speed communication even to remote regions.

Alex Sinclair, chief technology officer at GSMA, said combining the mobile industry’s global reach with ESA’s expertise in space technology would help usher in a new era of connectivity and deliver transformative benefits.

The move comes as global competition intensifies in satellite internet and advanced communications, with US companies currently holding a strong position. European officials say the continent’s strength in high-tech manufacturing and specialised software can offer an independent and competitive alternative.

Several European firms are showcasing their work under the programme at MWC, including Nokia, Filtronic, OQ Technology and MinWave Technologies. Demonstrations include live displays of hybrid network architectures and orchestration of satellite-terrestrial systems.

A centrepiece of the exhibition highlights Europe’s space ambitions through a mixed-reality model of ESA’s Argonaut lunar lander, designed to deliver cargo to the Moon. Visitors can remotely operate a training rover via a live satellite link, underscoring how Europe’s connectivity infrastructure is intended to support not only terrestrial innovation but also future lunar missions.

Tech

Mobile World Congress Opens in Barcelona With Focus on AI and 5G Concerns

Tech

Transatlantic Tensions on Digital Rules Highlight Need for Cooperation

Discussions between Europe and the United States over digital regulation continue to be marked by miscommunication and frustration: the two sides talk past each other while rivals watch from the sidelines. The European Union can set its own standards, but in an interconnected economy, decoupling fantasies and grandstanding won’t help.

The debate often centres on “free speech” concerns voiced by U.S. tech companies and policymakers in response to the EU’s legislative framework for digital platforms. In Europe, such narratives typically prompt defensive reactions. Some Europeans respond with a blunt message: “This is our land, our Union, our laws, follow them, or leave the EU—we’ll find alternative products to use!” Public awareness of American constitutional amendments is low across Europe, just as Americans pay little attention to European digital acts and regulations.

The transatlantic dialogue is further complicated by the global nature of social media platforms. Any EU legislation affecting user experience inevitably influences the functioning of these platforms worldwide, touching on what Americans see as free speech rights. The EU also seeks to extend its influence through the “Brussels effect,” ensuring that European rules shape global standards, while the U.S. maintains a large trade surplus in services and competes technologically with China. This mix of economic, political, and regulatory factors explains why U.S. attention is sharply focused on Europe’s digital policies.

Europeans argue that their 450-million-consumer market has the right to set rules that reflect local principles and values. Attempts to adjust or simplify regulations are difficult and often meet political resistance and scrutiny. The regulatory ecosystem in Europe also supports industries of lawyers, consultants, and experts whose work depends on maintaining complex rules, making reform a sensitive topic.

On the American side, anti-EU rhetoric by public figures has sometimes compounded the problem, drowning out moderates and reinforcing defensive European responses. Analysts note that both regions have seen productive voices sidelined as grandstanding and negative statements dominate public discourse.

Observers argue that long-term thinking is necessary. By evaluating the EU-U.S. tech partnership in the broader context of global alliances, including China and Russia, policymakers can better assess priorities and avoid unnecessary disruption. Blank-slate decoupling between Europe and the United States is unrealistic, and delaying constructive dialogue risks broader economic consequences.

Experts warn that continued transatlantic infighting benefits other global powers and weakens the ability of both regions to set coherent standards in emerging technologies. The message from analysts is clear: cooperation, not confrontation, will determine whether the EU and U.S. can maintain leadership in digital regulation while safeguarding economic and technological interests.

-

Entertainment2 years ago

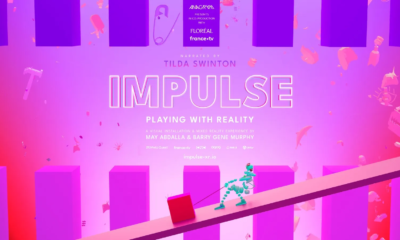

Entertainment2 years agoMeta Acquires Tilda Swinton VR Doc ‘Impulse: Playing With Reality’

-

Business2 years ago

Business2 years agoSaudi Arabia’s Model for Sustainable Aviation Practices

-

Business2 years ago

Business2 years agoRecent Developments in Small Business Taxes

-

Home Improvement1 year ago

Home Improvement1 year agoEffective Drain Cleaning: A Key to a Healthy Plumbing System

-

Politics2 years ago

Politics2 years agoWho was Ebrahim Raisi and his status in Iranian Politics?

-

Business2 years ago

Business2 years agoCarrectly: Revolutionizing Car Care in Chicago

-

Sports2 years ago

Sports2 years agoKeely Hodgkinson Wins Britain’s First Athletics Gold at Paris Olympics in 800m

-

Business2 years ago

Business2 years agoSaudi Arabia: Foreign Direct Investment Rises by 5.6% in Q1